Microsegmentation implementation

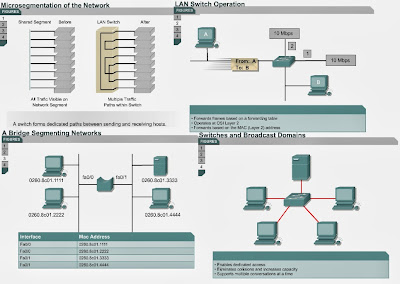

4.3.6 This page explains how a switch functions in a LAN as a result of microsegmentation.

LAN switches are considered multi-port bridges with effectively no shared collision domain: microsegmentation gives each port its own segment, so a host on a dedicated port does not compete with other hosts for the medium. Data is exchanged at high speeds by switching the frame to its destination. By reading the destination MAC address in the Layer 2 header, switches can achieve high-speed data transfers, much like a bridge does. This process leads to low latency and a high rate of frame forwarding.

Ethernet switching increases the bandwidth available on a network. It does this by creating dedicated network segments, or point-to-point connections, and connecting these segments in a virtual network within the switch. This connection is called a virtual circuit because it is established within the switch and exists only while two nodes need to communicate.
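The bandwidth gain from dedicated segments can be illustrated with some idealized arithmetic. The sketch below is a simplification, not a measurement: the function name `per_host_bandwidth_mbps` and the 10 Mbps, 10-host figures are assumptions chosen for illustration, and real throughput also depends on traffic patterns, duplex mode, and the switch's internal capacity.

```python
def per_host_bandwidth_mbps(link_mbps: float, hosts: int, switched: bool) -> float:
    """Idealized bandwidth available to each host, in Mbps (hypothetical helper)."""
    if hosts < 1:
        raise ValueError("hosts must be at least 1")
    # Shared segment (hub): all hosts contend for one medium, so the link
    # rate is divided among them in the ideal, evenly loaded case.
    # Switched: each host has a dedicated segment to its own port.
    return link_mbps if switched else link_mbps / hosts


# Assumed example values: ten hosts on 10 Mbps Ethernet.
shared = per_host_bandwidth_mbps(10, 10, switched=False)
dedicated = per_host_bandwidth_mbps(10, 10, switched=True)
print(f"shared segment: {shared} Mbps per host")
print(f"switched:       {dedicated} Mbps per host")
```

Under these assumptions, each host on the shared segment gets one tenth of the link rate on average, while each switched host keeps the full rate of its own segment.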

Even though the LAN switch reduces the size of collision domains, all hosts connected to the switch are still in the same broadcast domain. Therefore, a broadcast from one node will still be seen by all the other nodes connected through the LAN switch.
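The difference between collision domains and broadcast domains can be summarized as a simple count. This is a minimal sketch that assumes a single device with no VLANs configured; the function name `count_domains` and the device labels are hypothetical.

```python
from typing import Tuple


def count_domains(ports_in_use: int, device: str) -> Tuple[int, int]:
    """Return (collision_domains, broadcast_domains) for a single device.

    Assumes no VLANs: every port belongs to the same broadcast domain.
    """
    if ports_in_use < 1:
        raise ValueError("ports_in_use must be at least 1")
    if device == "hub":
        # A hub repeats every signal to every port: one shared segment.
        return (1, 1)
    if device == "switch":
        # Each switch port is its own collision domain, but broadcasts
        # are still delivered to every port.
        return (ports_in_use, 1)
    raise ValueError("device must be 'hub' or 'switch'")


print(count_domains(8, "hub"))
print(count_domains(8, "switch"))
```

The broadcast domain count stays at one in both cases, which is why a broadcast from one node still reaches every other node attached to the switch.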

Switches are data link layer devices that, like bridges, enable multiple physical LAN segments to be interconnected into a single larger network. Like bridges, switches forward and flood traffic based on MAC addresses. Because switching is performed in hardware instead of in software, it is significantly faster than bridging. Each switch port can be considered a micro-bridge that acts as a separate bridge and gives the full bandwidth of the medium to each host.
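The forward-and-flood behavior can be sketched as a small software model. This is only an illustration of the decision logic: a real switch does this in hardware, and the class name `LearningSwitch`, the port numbering, and the example MAC addresses are assumptions. The sketch also assumes the usual transparent-bridge behavior of learning source addresses, and it omits details such as address aging.

```python
from typing import Dict, List

BROADCAST = "ff:ff:ff:ff:ff:ff"


class LearningSwitch:
    """Toy model of Layer 2 forwarding (hypothetical, for illustration)."""

    def __init__(self, num_ports: int) -> None:
        self.ports = list(range(num_ports))
        self.mac_table: Dict[str, int] = {}  # MAC address -> port

    def receive(self, in_port: int, src_mac: str, dst_mac: str) -> List[int]:
        """Return the ports the frame is sent out of."""
        # Learn: the source address is reachable through the ingress port.
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if dst_mac == BROADCAST or out_port is None:
            # Flood: broadcasts and unknown destinations go out of every
            # port except the one the frame arrived on.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            # Destination is on the ingress segment: filter the frame.
            return []
        # Forward: known destination, send out of exactly one port.
        return [out_port]


sw = LearningSwitch(num_ports=4)
first = sw.receive(0, "aa:aa:aa:aa:aa:aa", "bb:bb:bb:bb:bb:bb")  # unknown destination
reply = sw.receive(1, "bb:bb:bb:bb:bb:bb", "aa:aa:aa:aa:aa:aa")  # destination learned
bcast = sw.receive(2, "cc:cc:cc:cc:cc:cc", BROADCAST)            # broadcast frame
print(first, reply, bcast)
```

In this model the first frame is flooded because the destination is not yet known, the reply is forwarded out of a single port, and the broadcast is sent to every port except the one it arrived on.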

The next page will discuss collisions.
